From Chaos to Clarity: How AI Transforms Data Normalization

Situation: The Overwhelming Reality of Modern IT (Security) Data

In today’s enterprise environments, IT and security teams face a relentless flood of telemetry from diverse sources: syslog servers, cloud platforms like AWS CloudTrail, network devices, applications, firewalls and other security tools, and Windows Event Logs. This data arrives in wildly different formats: a mix of RFC 3164 and RFC 5424 syslog framing, unstructured fields, timestamps in RFC 1123, ISO 8601, Unix epoch, and syslog styles, and even entries that carry two timestamps.
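To make the mixed-framing problem concrete, here is a minimal Python sketch that tells RFC 3164 and RFC 5424 syslog lines apart by their header prefix and decodes the priority value. The function name `classify_syslog` and the returned keys are illustrative assumptions, not part of any product API, and the patterns inspect only the start of the line rather than validating the full message.

```python
import re

# RFC 5424 headers start with "<PRI>VERSION TIMESTAMP", e.g. "<34>1 2003-10-11T22:14:15.003Z".
# RFC 3164 headers start with "<PRI>Mmm dd hh:mm:ss", e.g. "<34>Oct 11 22:14:15".
RFC5424 = re.compile(r"^<(\d{1,3})>[1-9]\d{0,2} (\S+) ")
RFC3164 = re.compile(r"^<(\d{1,3})>([A-Z][a-z]{2} [ \d]\d \d{2}:\d{2}:\d{2}) ")


def classify_syslog(line):
    """Return the detected framing and decoded priority (hypothetical helper)."""
    for name, pattern in (("rfc5424", RFC5424), ("rfc3164", RFC3164)):
        match = pattern.match(line)
        if match:
            pri = int(match.group(1))
            return {
                "format": name,
                "facility": pri // 8,  # PRI = facility * 8 + severity
                "severity": pri % 8,
                "raw_timestamp": match.group(2),
            }
    # Anything else is left for a fallback parser or manual review.
    return {"format": "unknown"}


if __name__ == "__main__":
    print(classify_syslog("<34>1 2003-10-11T22:14:15.003Z host su - ID47 - failed"))
    print(classify_syslog("<34>Oct 11 22:14:15 host su: 'su root' failed"))
```

Detecting the framing first keeps the later steps simple: each format can then be handed to a parser that knows where its hostname, application name, and message body live.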

For large enterprises, that means ingesting anywhere from tens to hundreds of terabytes of data daily from over 500 sources—where logs from sshd, snmpd, radiusd, kernel, and sudo differ wildly in format and structure. Without effective normalization, these logs fragment into silos, crippling visibility across multi-cloud and hybrid environments.

Complication: The Chaos Hindering Effective Analysis

The real challenge isn’t a lack of data—it’s the lack of consistency:

- Timestamps missing time zones or using conflicting formats.

- Malformed entries that can’t be parsed.

- Critical fields like hostnames, usernames, IPs, and protocols buried in noisy, unstructured text.
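The timestamp problem in the list above can be sketched in a few lines of standard-library Python. This is an illustration under stated assumptions, not how any particular product implements it: the function name `to_utc_iso` is hypothetical, and the `assumed_year` and `assumed_tz` parameters stand in for context that RFC 3164 style timestamps simply do not carry.

```python
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime


def to_utc_iso(raw, assumed_year=2024, assumed_tz=timezone.utc):
    """Normalize one raw timestamp string to an ISO 8601 string in UTC."""
    raw = raw.strip()
    parsers = (
        # Unix epoch seconds, e.g. "1700000000"
        lambda s: datetime.fromtimestamp(float(s), tz=timezone.utc),
        # ISO 8601, e.g. "2024-03-01T12:00:00+02:00" (a trailing "Z" means UTC)
        lambda s: datetime.fromisoformat(s.replace("Z", "+00:00")),
        # RFC 1123, e.g. "Tue, 15 Nov 1994 08:12:31 GMT"
        parsedate_to_datetime,
        # Syslog (RFC 3164) style, e.g. "Oct 11 22:14:15": no year, no zone
        lambda s: datetime.strptime(f"{assumed_year} {s}", "%Y %b %d %H:%M:%S"),
    )
    for parse in parsers:
        try:
            parsed = parse(raw)
        except (TypeError, ValueError, OverflowError, OSError):
            continue  # this format did not match, try the next one
        if parsed.tzinfo is None:
            # The source omitted a time zone, so apply the assumed one.
            parsed = parsed.replace(tzinfo=assumed_tz)
        return parsed.astimezone(timezone.utc).isoformat()
    raise ValueError(f"unrecognized timestamp: {raw!r}")


if __name__ == "__main__":
    for sample in ("86400", "2024-03-01T12:00:00+02:00",
                   "Tue, 15 Nov 1994 08:12:31 GMT", "Oct 11 22:14:15"):
        print(sample, "->", to_utc_iso(sample))
```

The order of attempts matters: the strictest formats are tried first, and the zone-less syslog style comes last because it relies on assumptions that should be set per source rather than globally.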

Traditional normalization methods—manual Grok rules, regex scripts, and fragile custom parsers—can’t scale. They break when vendors update firmware or when a new log format appears. The result: ingestion delays, parsing errors, incomplete serialization, and wasted storage on low-value logs.

For security and DevOps teams, troubleshooting becomes an archeological dig through 2,000+ log entries per second, turning urgent alerts into hours-long hunts.

Implication: Costly Delays and Risks for Organizations

Without proper data normalization, raw, inconsistent logs slow detection, inflate costs, and weaken security posture:

- Slower detection – Inconsistent formats force analysts to manually reconcile data, increasing MTTR by up to 42% and allowing threats to escalate.

- Storage waste – Premium SIEM platforms like Splunk or Elastic ingest redundant, unoptimized logs, draining budget on storing noise instead of insight.

- Alert fatigue – Poorly normalized data creates duplicate or misleading alerts, fueling a 40% increase in missed or delayed responses.

- Compliance risks – Fragmented, siloed logs obscure data lineage, making audits harder and putting compliance at risk.

In short, lack of normalization turns logs into a liability—raising operational costs, eroding productivity, and amplifying breach risk.

Normalization Gaps = Higher Costs, Slower Response, Greater Risk

Without proper normalization, inconsistent log formats slow detection, bloat SIEM storage costs, trigger misleading alerts, and create compliance blind spots. These gaps erode trust in the data—so when a ransomware attack begins encrypting systems, teams waste precious minutes reconciling conflicting information instead of stopping the threat. The result: higher costs, longer recovery, and greater breach impact.

Position: Observo AI’s AI-Driven Normalization Pipeline as the Solution

Observo AI eliminates this complexity with an intelligent, scalable normalization pipeline that transforms heterogeneous telemetry into standards-aligned formats like Splunk CIM and OCSF.

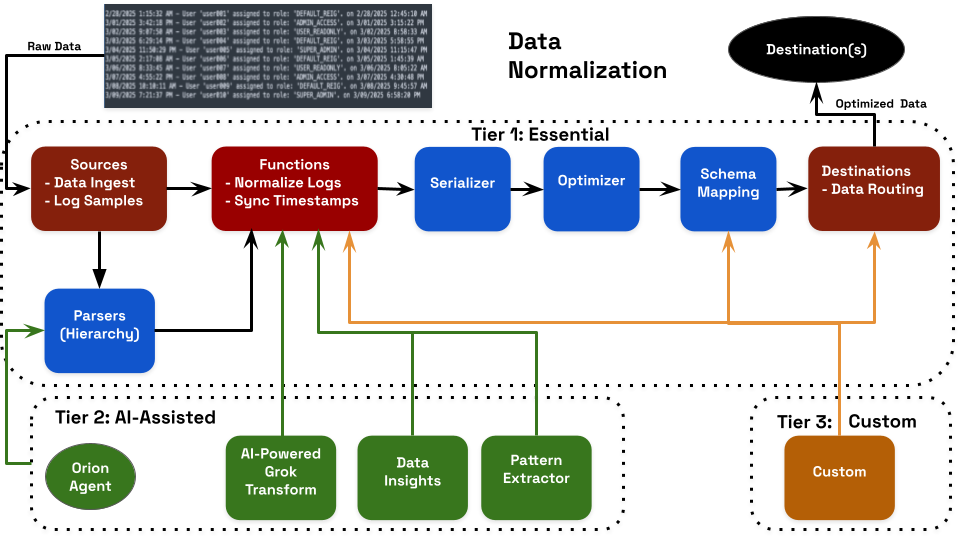

At the heart of Observo AI lies a robust platform that integrates powerful components—including AI-optimized parsing, timestamp synchronization, log normalization, AI-powered Grok transform, data insights, pattern extraction, and schema mapping—all optionally orchestrated by the intelligent Orion AI Agent. This seamless orchestration powers our flexible three-tier approach, empowering users to apply AI assistance exactly where it's most impactful, while retaining full control and unlocking maximum automation efficiencies.

Our three-tier model ensures both instant value and deep customization:

- Tier 1 – Essential: Comprehensive out-of-the-box normalization covering 80% of common data sources, featuring intelligent Sources for streamlined ingestion and log sampling, advanced Parsers for format detection, Functions for automated timestamp synchronization and log normalization, efficient Serializers for output formatting, performance Optimizers, automated Schema Mapping to industry standards, and intelligent Destination-based Data Routing based on proven best practices.

- Tier 2 – AI-Assisted: Leverages machine learning and the Orion AI Agent to enhance normalization through pattern recognition, field extraction, optimization, and smart routing. This tier automates complex tasks, detects subtle anomalies, and adapts to evolving data structures.

- Tier 3 – Custom: Lua scripting and bespoke schema support for proprietary formats, complex JSON, and compliance-critical metadata preservation, plus API extensions for specialized data routing.

In the diagram, red is required, blue is optional, green indicates AI-Assisted and orange indicates Custom.

Observo AI simplifies data processing with a series of powerful components:

- Sources: The Sources component handles data from over 500 sources, collecting up to 100 PB per month, with support for custom log sampling and timestamped event generation capabilities.

- Parsers: The Parser component ensures 80% of data sources work "out-of-the-box". It includes pre-built parsers and AI assistance to reduce manual parser selection and configurations.

- Functions: This component normalizes heterogeneous log data to enhance processing efficiency and maximize downstream analytics value. By leveraging AI-driven assistance through the Orion Agent, it delivers intelligent data normalization with advanced customization capabilities, including Lua scripting support for specialized log processing requirements. The integrated timestamp synchronization functionality enables precise cross-system event correlation by harmonizing diverse timestamp formats—from RFC1123 and ISO 8601 to Unix and Syslog—utilizing AI-powered Grok Transform patterns to accurately process even the most complex temporal data structures.

- Serializers: This component prepares data for analytics platforms. It offers vendor-specific formatting, multi-platform compatibility, and a template library.

- Optimizers: This component reduces storage costs by 74% by dropping unnecessary data.

- AI-Powered Grok Transform: This component automatically generates patterns and detects formats, so you don't have to write regex manually.

- Pattern Extraction: This component uses event clustering and anomaly detection to reduce noise and improve visibility.

- Data Insights: This component provides real-time analytics, cardinality analysis, and optimization suggestions. It streamlines normalization and boosts performance and security.

- Schema Mapping: This component ensures seamless compatibility with analytics platforms like Splunk by automatically mapping data schemas. It uses AI assistance alongside the Orion Agent to accelerate integration, aligning extracted and enriched log fields to standardized schemas like Splunk’s Common Information Model (CIM) and the Open Cybersecurity Schema Framework (OCSF). It also supports customization such as Lua scripting for specialized schema mapping.

- Destinations: This component intelligently delivers data to the most appropriate destinations, whether high-performance analytics platforms like Splunk for real-time insights or cost-efficient storage like AWS S3 for long-term retention, while enabling simultaneous delivery to multiple endpoints. It also supports custom API extensions for specialized data routing.
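As a rough illustration of what the Schema Mapping and Destinations components do conceptually, the Python sketch below renames vendor-specific fields to a common set of names and then picks destinations for each event. Everything in it is an assumption made for illustration: the rename table, the `archive` and `siem` destination labels, and the routing rule are hypothetical and do not describe Observo AI's configuration or API. The target names `src`, `user`, `dest`, and `action` are loosely modeled on Splunk CIM authentication fields and should be checked against the schema you actually use.

```python
# Hypothetical rename tables: vendor field name -> common field name.
FIELD_MAP = {
    "sshd": {"rhost": "src", "username": "user", "hostname": "dest", "result": "action"},
    "radiusd": {"client_ip": "src", "login": "user", "nas": "dest", "status": "action"},
}
REQUIRED = ("src", "user", "dest", "action")


def map_event(source, event):
    """Rename known fields, keep unknown ones, and report required fields that are absent."""
    mapping = FIELD_MAP[source]
    mapped = {mapping.get(key, key): value for key, value in event.items()}
    mapped["_missing"] = [name for name in REQUIRED if name not in mapped]
    return mapped


def route(event):
    """Send everything to low-cost storage; copy high-value events to the SIEM."""
    destinations = ["archive"]
    # Syslog severity runs from 0 (emergency) to 7 (debug); 3 is "error".
    if event.get("action") == "failure" or event.get("severity", 7) <= 3:
        destinations.append("siem")
    return destinations


if __name__ == "__main__":
    raw = {"rhost": "10.0.0.5", "username": "root", "hostname": "web01", "result": "failure"}
    normalized = map_event("sshd", raw)
    print(normalized, "->", route(normalized))
```

Because both sources end up with the same field names, a single routing rule (or a single SIEM query) covers them, which is the practical payoff of mapping before routing.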

Action: Steps to Implement Observo AI and Achieve Clarity

- Ingest & Connect – Onboard sample or production logs from any supported source or protocol—whether file upload, API, agent, collector, or streaming pipeline—to establish a live data flow.

- Deploy Tier 1 Essential – Skip complex data prep—built-in normalization features provide immediate operational insights with simple, low-touch setup.

- Leverage Tier 2 AI Intelligence – Use the AI-assisted capabilities (AI-Powered Grok Transform, Data Insights, and Pattern Extraction) to enhance the essential components: Parsers (Hierarchy), Functions for log normalization and timestamp synchronization, and Schema Mapping. Optionally orchestrated by the Orion AI Agent, this approach improves data fidelity and significantly reduces manual effort.

- Extend with Tier 3 Customization – Implement advanced mappings, workflows, and regulatory controls tailored to unique formats and compliance mandates.

- Prioritize High-Value Sources – Start with business-critical systems such as CloudTrail and core network telemetry to deliver early ROI and showcase performance gains.

- Expand & Automate – Roll out ingestion from all supported sources in phases, applying standard normalization to keep processing efficient as volume grows.
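The Pattern Extraction capability named in the Tier 2 step relies on event clustering. One common and simple way to cluster log lines, shown here only as a sketch and not as Observo AI's method, is to mask the variable parts of each message and count the resulting templates. The masks and function names below are hypothetical, and a real deployment would need more masks (usernames, hex IDs, paths).

```python
import re
from collections import Counter

# Each mask replaces a class of variable token with a placeholder.
# Order matters: IPs are masked before bare numbers.
MASKS = [
    (re.compile(r"\b\d{1,3}(?:\.\d{1,3}){3}\b"), "<IP>"),
    (re.compile(r"\b\d+\b"), "<NUM>"),
]


def template_of(message):
    """Reduce a log message to its template by masking variable tokens."""
    for pattern, placeholder in MASKS:
        message = pattern.sub(placeholder, message)
    return message


def cluster(messages):
    """Count how many messages share each template."""
    return Counter(template_of(message) for message in messages)


if __name__ == "__main__":
    logs = [
        "Failed password for root from 10.0.0.5 port 22",
        "Failed password for root from 10.0.0.9 port 51234",
        "Accepted publickey for deploy from 192.168.1.4 port 40022",
    ]
    for template, count in cluster(logs).most_common():
        print(count, template)
```

High-count templates are candidates for sampling or dropping to reduce noise, while templates that appear only rarely are worth a closer look as possible anomalies.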

Benefit: Tangible Gains in Efficiency, Security, and Savings

- 98%+ field accuracy in normalized datasets, ensuring reliable downstream analytics and threat detection.

- 42% faster MTTR through immediate, accurate event correlation.

- 65–80% faster query performance in SIEMs and data lakes from consistent schema and reduced parsing overhead.

- Up to 90% fewer false positives via standardized fields and enriched context.

- 74%+ storage cost reduction by routing critical logs to SIEM and routine logs to low-cost storage — without losing normalization fidelity.

- >500 source types unified under open schemas (Splunk CIM, OCSF) without manual mapping.

- Hundreds of terabytes processed daily without compromising speed, accuracy, or compliance.

- 85% faster onboarding and streamlined workflows across security and DevOps teams.

- Days-to-hours source integration through pre-trained normalization models and essential mappings.

Case Study Spotlight: How a Retail Giant Transformed Data Chaos into Security Clarity

One of the world's largest retailers struggled with fragmented data from thousands of stores and cloud services. Their SOC was drowning in inconsistent logs from POS systems, inventory platforms, and security tools with mixed timestamp formats, varied syslog structures, and unstructured event data—making correlation nearly impossible and investigations painfully slow.

After deploying Observo AI's normalization platform:

- MTTR fell 45% as analysts could finally correlate events across systems with standardized timestamps and unified field structures.

- Threat detection accuracy improved 35% through AI-assisted parsing that extracted critical fields like usernames, IPs, and protocols from previously unstructured logs.

- Data consistency reached 95% across all sources, eliminating the manual effort to decode mixed RFC formats and dual-timestamp entries.

- Onboarding time was cut from weeks to days, with 80% of their diverse log sources normalized immediately using Essential templates.

This transformation shifted their SOC from manual data wrangling to proactive threat hunting: analysts now spend their time analyzing threats instead of formatting data. The normalized, enriched telemetry enabled accurate cross-system correlation that had been impossible with their chaotic data landscape.

For more information on the promise of an AI-native data pipeline, read The CISO Field Guide to AI Security Data Pipelines.