Enrich & Deliver: Context-Ready Logs

Situation: The Data Deluge Challenge

Modern security operations are drowning in data but starving for intelligence. Organizations process terabytes of raw telemetry daily from a wide variety of sources, including firewalls, endpoints, and cloud services, yet 80-90% of it lacks the contextual intelligence needed for effective threat detection and response. This torrent of data creates a major challenge for Security Operations Centers (SOCs).

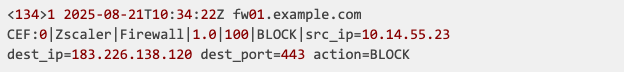

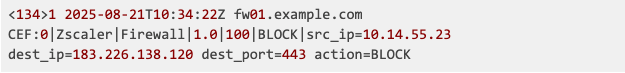

For example, a typical Zscaler NSS firewall log provides basic network metadata but lacks critical context:

This structured entry shows a source IP, destination IP, port, and action, but doesn't answer crucial questions such as:

- Is 183.226.138.120 associated with malicious C2 infrastructure?

- Does this traffic pattern indicate command-and-control communication?

- What is the criticality of the affected asset?

- Is the user exhibiting anomalous behavior?

This absence of actionable context—not the data volume itself—is the core issue facing both IT and security teams.

Complication: Context-Poor Telemetry at Scale

Traditional SOC workflows compound this challenge through fundamental operational limitations:

- Manual Investigations: Analysts perform time-consuming manual lookups that slow response by up to 42%, leaving organizations vulnerable to escalating threats

- Generic Detection Rules: Cookie-cutter detection logic generates excessive false positives, creating alert fatigue and causing analysts to miss legitimate threats

- Reactive Analysis: Post-incident analysis misses early attack indicators, significantly increasing Mean Time to Respond (MTTR)

- High SIEM Costs: Without intelligent filtering, organizations ingest and store massive volumes of low-value data in premium SIEM platforms, draining budgets on noise instead of insights

The result is a security operation perpetually behind the threat curve—troubleshooting becomes a forensic excavation through thousands of log entries per second, while inconsistent log formats cripple visibility across multi-cloud and hybrid environments.

Implication: Costly Delays and Compounding Risks

The lack of context in security logs creates cascading operational and business risks:

Operational Impact:

- Delayed Detection: Manual investigations and context-poor data significantly delay incident response, allowing threats to escalate

- Resource Waste: Analysts spend 60-70% of their time on manual triage rather than strategic threat hunting

- Alert Fatigue: Generic detection rules overwhelm teams with false positives, causing real threats to be deprioritized or missed entirely

Financial Impact:

- Premium SIEM Costs: Organizations pay premium rates to store and process low-value telemetry that should be filtered or archived

- Operational Inefficiency: Extended investigation times multiply the cost of every security incident

- Opportunity Cost: Security teams remain reactive rather than proactive, missing chances to prevent attacks

Strategic Risk:

- Compromised Security Posture: Reliance on reactive analysis means consistently missing early attack indicators

- Competitive Disadvantage: Organizations maintaining status quo approaches fall further behind threat actors who continuously evolve their techniques

The opportunity cost compounds daily—every hour of delayed enrichment implementation represents missed threats, wasted analyst time, and unnecessary SIEM expenses.

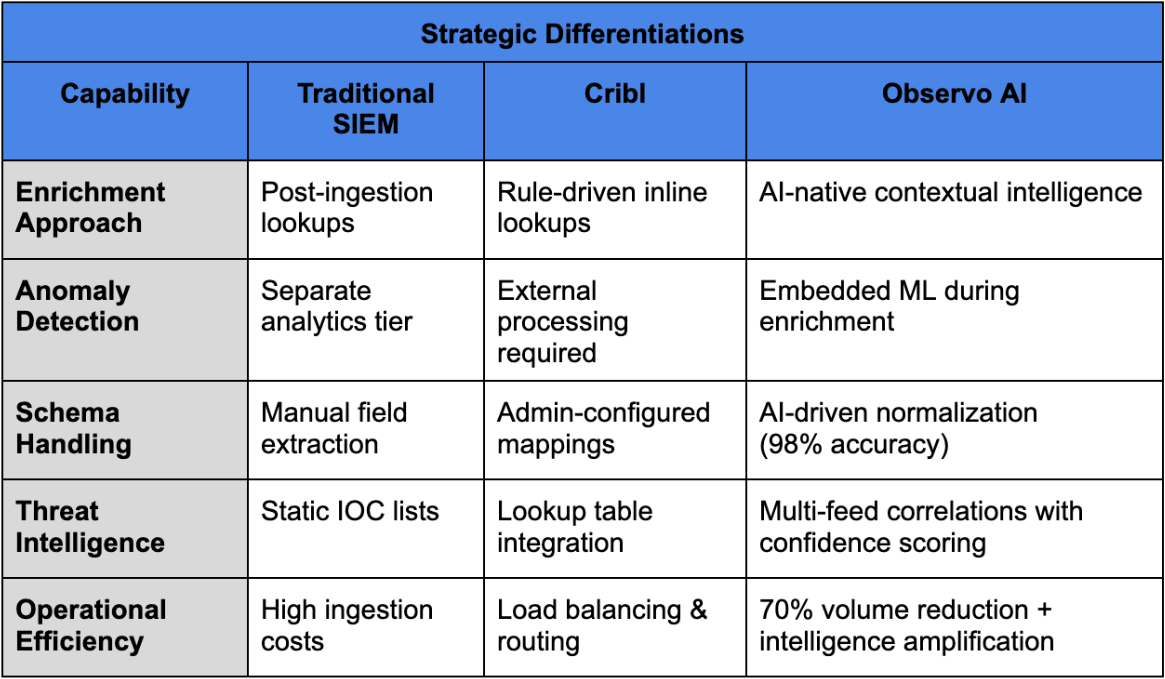

Position: Intelligence-Driven Security Operations

Observo AI fundamentally reimagines security telemetry processing by positioning an intelligence amplification layer between raw data sources and SIEM platforms. Unlike traditional approaches that focus on data plumbing or post-ingestion lookups, Observo AI delivers AI-native contextual intelligence that transforms raw logs into decision-ready insights at the point of ingestion.

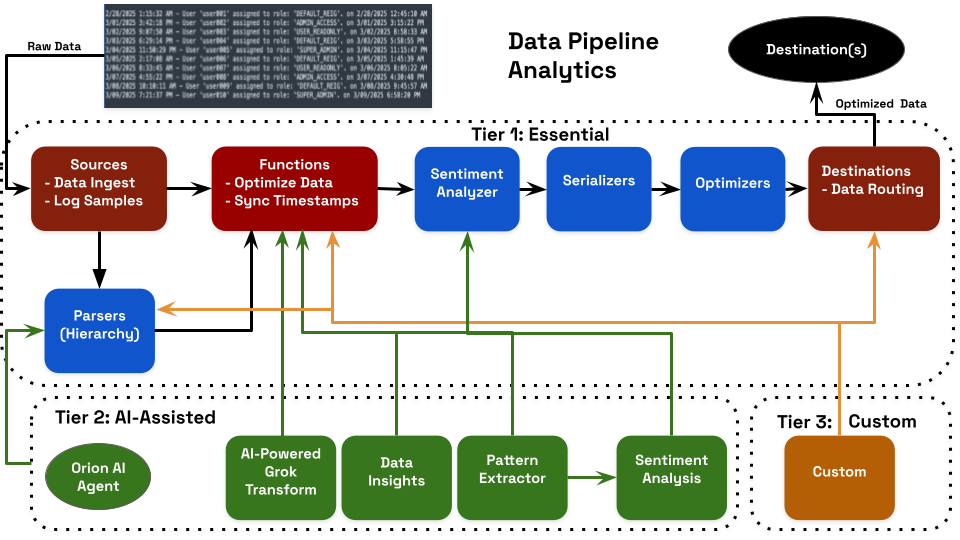

The platform operates through a flexible three-tier architecture:

- Essential (Tier 1): Out-of-the-box normalization for 80% of common sources with low-touch setup

- AI-Assisted (Tier 2): Machine learning and Orion AI Agent for pattern recognition, anomaly detection, and intelligent optimization recommendations

- Custom (Tier 3): Lua scripting and bespoke schema support for proprietary formats, reducing manual effort by up to 85%

Our enrichment engine operates through a comprehensive two-tier lookup framework combining static organizational data with dynamically updated intelligence sources. Static reference tables maintain persistent organizational context including asset criticality hierarchies, user privilege classifications, and compliance boundary definitions, while dynamic lookup capabilities enable real-time querying of continuously updated datasets including vulnerability scan results and behavioral baselines.
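
The two lookup tiers described above can be sketched roughly as follows. This is a minimal illustration and not Observo AI's implementation: the table contents, the `DynamicLookup` class, the `fake_vuln_scan` function, and the five-minute refresh interval are all assumptions made for the example.

```python
import time
from typing import Callable, Dict, Optional, Tuple

# Static tier: hypothetical asset-criticality table keyed by IP address.
STATIC_ASSETS: Dict[str, dict] = {
    "10.0.4.17": {"asset_criticality": "high", "owner": "finance"},
}


class DynamicLookup:
    """Dynamic tier: caches results from a refreshable source for ttl_seconds."""

    def __init__(self, fetch: Callable[[str], Optional[dict]], ttl_seconds: float = 300.0):
        self._fetch = fetch
        self._ttl = ttl_seconds
        self._cache: Dict[str, Tuple[float, Optional[dict]]] = {}

    def get(self, key: str) -> Optional[dict]:
        now = time.monotonic()
        hit = self._cache.get(key)
        if hit is not None and now - hit[0] < self._ttl:
            return hit[1]  # cached value is still fresh
        value = self._fetch(key)  # otherwise re-query the live source
        self._cache[key] = (now, value)
        return value


def fake_vuln_scan(ip: str) -> Optional[dict]:
    # Stand-in for a vulnerability-scanner query; the data is invented.
    return {"10.0.4.17": {"exposure_score": 7.8}}.get(ip)


def enrich(event: dict, dynamic: DynamicLookup) -> dict:
    """Return a copy of the event with static and dynamic context merged in."""
    enriched = dict(event)
    ip = event.get("src_ip", "")
    enriched.update(STATIC_ASSETS.get(ip, {}))
    enriched.update(dynamic.get(ip) or {})
    return enriched
```

Calling `enrich({"src_ip": "10.0.4.17", "action": "BLOCK"}, DynamicLookup(fake_vuln_scan))` would add the criticality, owner, and exposure score to the event, while an unknown address passes through unchanged.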

The platform incorporates high-performance threat intelligence matching that processes multiple concurrent feed sources, including commercial platforms (Abuse.ch URLhaus, SSLBL), open source indicators (AlienVault OTX, GreyNoise), industry sharing consortiums, and proprietary research. Pattern-matching algorithms generate confidence scores by correlating indicators against known threat behaviors, reputation databases, and behavioral baselines.
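
To make multi-feed confidence scoring concrete, here is one possible sketch. The source does not describe the scoring formula, so the noisy-OR combination below is a swapped-in technique chosen for illustration; the feed contents, the per-feed weights, and the `match_indicator` function are hypothetical, and the addresses come from reserved documentation ranges.

```python
from typing import Dict, Set

# Hypothetical in-memory snapshots of three feeds (invented indicators).
FEEDS: Dict[str, Set[str]] = {
    "urlhaus": {"203.0.113.7"},
    "otx": {"203.0.113.7", "198.51.100.23"},
    "greynoise": {"198.51.100.23"},
}

# Assumed trust weight per feed, each in [0, 1].
FEED_WEIGHT: Dict[str, float] = {"urlhaus": 0.8, "otx": 0.6, "greynoise": 0.5}


def match_indicator(ip: str) -> dict:
    """Match an IP against every feed and combine the hits into one score."""
    matched = sorted(name for name, iocs in FEEDS.items() if ip in iocs)
    # Noisy-OR: probability that at least one matching feed is right,
    # treating the feeds as independent sources.
    miss = 1.0
    for name in matched:
        miss *= 1.0 - FEED_WEIGHT[name]
    return {"indicator": ip, "feeds": matched, "confidence": round(1.0 - miss, 3)}
```

With these weights, an indicator seen in two feeds scores higher than one seen in either feed alone, and an indicator seen in none scores zero.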

Action: Implementing the Four-Stage Intelligence Workflow

Observo AI's pipeline operates through four integrated stages that transform raw telemetry into analytics-ready intelligence:

Stage 1: Intelligent Filtering & Optimization

- AI-assisted recommendations: Pattern extraction identifies optimization opportunities and suggests filter improvements

- Transform-driven filtering: Automatically drops low-value events (routine ALLOW traffic, background scans)

- Cost-aware routing: Sends mission-critical enriched events to the SIEM and bulk data to low-cost object storage

- Sampling policies: Maintains forensic value while reducing volume by 70-80%
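
The filtering, routing, and sampling steps above might look something like the sketch below. It is an illustration under stated assumptions: the `action`, `threat_match`, and `id` field names, the 25% sample rate, and the three destination labels are hypothetical and are not taken from the product.

```python
import hashlib

SAMPLE_RATE = 0.25  # assumed fraction of routine ALLOW events to retain


def keep_sample(event_id: str, rate: float = SAMPLE_RATE) -> bool:
    """Deterministic sampling: hash the event ID into [0, 1) and compare."""
    digest = hashlib.sha256(event_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") / 2**64
    return bucket < rate


def route(event: dict) -> str:
    """Return the destination for an event: 'siem', 'archive', or 'drop'."""
    # High-value events always go to the SIEM.
    if event.get("action") == "BLOCK" or event.get("threat_match"):
        return "siem"
    # Routine ALLOW traffic is sampled; the retained share goes to cheap storage.
    if event.get("action") == "ALLOW":
        return "archive" if keep_sample(event["id"]) else "drop"
    # Everything else is kept in low-cost object storage.
    return "archive"
```

Hashing the event ID rather than drawing a random number keeps the decision reproducible, so replaying the same log produces the same retained sample.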

Stage 2: Normalization & Intelligent Parsing

- AI-powered Grok transforms: Auto-generate parsing patterns for mixed log formats, reducing manual regex maintenance

- Schema standardization: Map to vendor-agnostic schemas (CIM/OCSF-compatible) for immediate SIEM usability

- Timestamp harmonization: Unify mixed time formats (ISO 8601, Unix epoch, RFC 1123) into a canonical @timestamp field

- Field mapping: Consistent field names across diverse data sources, achieving 98%+ field accuracy
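
Timestamp harmonization and field mapping can be approximated with the Python standard library alone. The sketch below is not the platform's parser: the `FIELD_MAP` entries, the `time` input field name, and the choice to treat timezone-less values as UTC are assumptions made for the example.

```python
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime

# Hypothetical vendor-specific field names mapped to one common name.
FIELD_MAP = {
    "srcip": "src_ip",
    "source_address": "src_ip",
    "dstip": "dst_ip",
    "dest": "dst_ip",
}


def to_canonical_timestamp(raw) -> str:
    """Convert a Unix epoch, ISO 8601, or RFC 1123 value to ISO 8601 in UTC."""
    if isinstance(raw, (int, float)):
        dt = datetime.fromtimestamp(raw, tz=timezone.utc)
    else:
        text = str(raw).strip()
        try:
            # Numeric strings are treated as epoch seconds.
            dt = datetime.fromtimestamp(float(text), tz=timezone.utc)
        except ValueError:
            try:
                dt = datetime.fromisoformat(text.replace("Z", "+00:00"))
            except ValueError:
                dt = parsedate_to_datetime(text)  # RFC 1123 / RFC 2822 dates
    if dt.tzinfo is None:
        dt = dt.replace(tzinfo=timezone.utc)  # assumption: naive values are UTC
    return dt.astimezone(timezone.utc).isoformat()


def normalize(event: dict, time_field: str = "time") -> dict:
    """Rename known fields and attach a canonical @timestamp."""
    out = {FIELD_MAP.get(k, k): v for k, v in event.items() if k != time_field}
    out["@timestamp"] = to_canonical_timestamp(event[time_field])
    return out
```

For instance, `1714564800`, `"2024-05-01T12:00:00Z"`, and `"Wed, 01 May 2024 12:00:00 GMT"` all describe the same instant, so each would map to the same `@timestamp` value.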

Stage 3: Multi-Layer Intelligence Enrichment

Events are enriched through comprehensive intelligence layers:

- Threat Intelligence: IOC matching against Abuse.ch (URLhaus, SSLBL), AlienVault OTX, GreyNoise, and Feodo Tracker with reputation scoring and malware attribution

- Geolocation & Network Context: MaxMind GeoLite2 integration providing country, ASN, hosting provider, and routing metadata

- User & Asset Context: IAM integration for privilege levels, CMDB connectivity for asset criticality, vulnerability management for current exposure scores

- Behavioral Analytics: Anomaly detection, baseline deviations, and risk scoring based on pattern correlation against known threat behaviors

Stage 4: Aggregation & Risk Scoring

- Temporal correlation: Groups related events within sliding time windows, such as brute force attempts within 5 minutes

- Cross-source correlation: Links network, endpoint, and identity events to create multi-dimensional incident graphs

- Risk calculation: Multi-factor scoring weighing threat intelligence, asset criticality, user risk, and anomaly severity

- Incident formation: Converts event clusters into actionable incidents with automated prioritization
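
As a rough illustration of temporal correlation and multi-factor scoring, the sketch below counts failed logins per user inside a five-minute sliding window and combines four factors into a weighted score. Only the five-minute window comes from the text; the threshold of five failures, the factor weights, and the class and function names are hypothetical, and events are assumed to arrive in time order.

```python
from collections import defaultdict, deque
from typing import Deque, Dict

WINDOW_SECONDS = 300        # the 5-minute window mentioned above
FAILED_LOGIN_THRESHOLD = 5  # assumed number of failures that signals brute force


class BruteForceDetector:
    """Tracks failed logins per user inside a sliding time window."""

    def __init__(self, window: float = WINDOW_SECONDS, threshold: int = FAILED_LOGIN_THRESHOLD):
        self.window = window
        self.threshold = threshold
        self._failures: Dict[str, Deque[float]] = defaultdict(deque)

    def observe(self, user: str, ts: float) -> bool:
        """Record a failure at ts (epoch seconds); True once the threshold is met."""
        q = self._failures[user]
        q.append(ts)
        # Evict failures that have fallen out of the window.
        while q and ts - q[0] > self.window:
            q.popleft()
        return len(q) >= self.threshold


# Assumed weights for the four factors named in the text; they sum to 1.
WEIGHTS = {"threat_intel": 0.4, "asset_criticality": 0.3, "user_risk": 0.2, "anomaly": 0.1}


def risk_score(factors: Dict[str, float]) -> float:
    """Weighted sum of factors (each clamped to [0, 1]), scaled to 0-100."""
    total = sum(
        WEIGHTS[name] * min(max(factors.get(name, 0.0), 0.0), 1.0)
        for name in WEIGHTS
    )
    return round(100 * total, 1)
```

A linear weighted sum is the simplest way to combine the factors; a production scorer would likely tune the weights against labeled incidents.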

Real-World Transformation Example:

Raw Zscaler NSS Log:

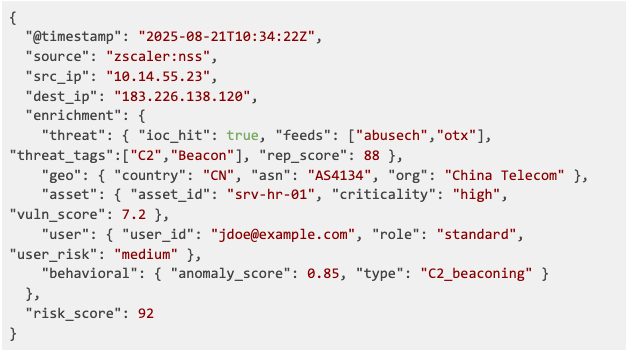

Enriched Output:

This transformation converts a basic blocked connection into a high-priority C2 beacon incident with complete contextual intelligence, enabling immediate containment actions.

This four-stage workflow transforms noisy, heterogeneous telemetry into analytics-ready, context-rich security events that dramatically reduce analyst workload while improving detection accuracy. The result is a scalable intelligence amplification layer that bridges the gap between raw data ingestion and actionable security insights.

Benefit: Measurable ROI and Strategic Security Transformation

Observo AI delivers quantifiable improvements across detection performance, cost optimization, and security posture:

Detection Performance Enhancement:

- 60-80% reduction in Mean Time to Detection (MTTD) through contextual intelligence

- 70% reduction in false positives via multi-source correlation and confidence scoring

- 40-50% improvement in Mean Time to Respond (MTTR) through automated incident prioritization

Cost Optimization:

- 50-75% reduction in SIEM ingestion and storage costs through intelligent filtering and routing

- 70-80% decrease in storage requirements by routing low-value data to cost-effective storage

- 30-40% reduction in analyst workload through automation and intelligent prioritization

Strategic Security Transformation:

- Proactive Threat Hunting: Rich contextual data enables hypothesis-driven investigation rather than reactive alert triage

- Automated Response: High-confidence enrichment triggers immediate containment actions through SOAR integration

- Cross-Team Value: Enriched data simultaneously serves Security, DevOps, and Compliance teams with unified schemas

- Future-Ready Operations: Intelligence-first approach positions organizations ahead of evolving threat landscapes

Competitive Advantage: Organizations implementing Observo AI gain fundamental operational efficiency that frees security teams to focus on strategic threat hunting rather than manual log analysis. This evolution from reactive log analysis to proactive, intelligence-driven operations represents the future of cybersecurity—and Observo AI makes that future available today.

The cybersecurity landscape demands this strategic shift. Organizations that continue relying on raw telemetry analysis will find themselves perpetually behind the threat curve, missing critical attack indicators while drowning in alerts. Observo AI transforms this challenge into competitive advantage, delivering precise, actionable intelligence that enables truly proactive security operations.

To learn more about how AI-native data pipelines can enrich security data, read The CISO Field Guide to AI Security Data Pipelines.